[Closed] Kinect Fusion Scanner in 3Ds MAX

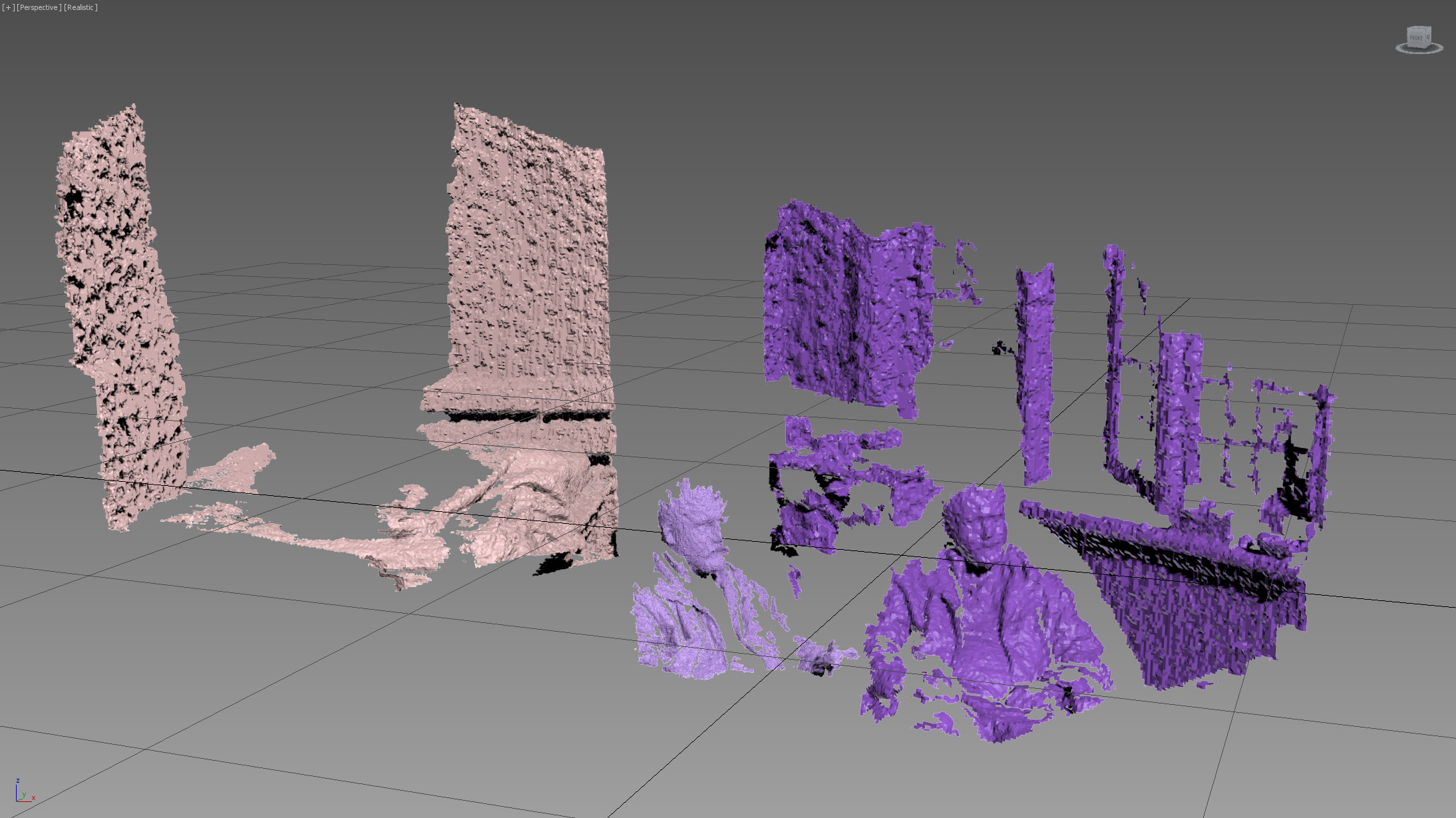

I spent a bit of time today playing around with the Kinect scanner in dotNet & mxs. The results below are quite rough, but only because I got as far as single snaps. If you're familiar with the Kinect Fusion samples, you'll know they refine detail greatly over multiple shots, and the GPU-powered point cloud merging is all exposed via dotNet. Since I was just toying around, I thought I'd post the script here for others' interest.

Requirements:

[ul]
[li]3Ds MAX ~2013[/li]
[li]A Kinect[/li]
[li]Kinect SDK 1.7+[/li]
[li]The getFiles @"C:\ portion of the script pathed correctly if you didn't install to C:[/li]
[li]The file KinectFusion170_64.dll, found in W:\Program Files\Microsoft SDKs\Kinect\Developer Toolkit v1.7.0\Samples\bin\, placed in your root 3Ds MAX folder[/li]
[/ul]

--Loading required classes and assemblies

for assembly in #(@"Microsoft.Kinect.dll") + getFiles @"C:\Program Files\Microsoft SDKs\Kinect\Developer Toolkit v1.7.0\Assemblies\*" do dotnet.loadAssembly assembly

FusionReconstruction = dotNetClass "Microsoft.Kinect.Toolkit.Fusion.Reconstruction"

FusionFloatImageFrame = dotNetClass "Microsoft.Kinect.Toolkit.Fusion.FusionFloatImageFrame"

FusionDepthProcessor = dotNetClass "Microsoft.Kinect.Toolkit.Fusion.FusionDepthProcessor"

kinectSensor = dotNetClass "Microsoft.Kinect.KinectSensor"

rollout kinectScanner "Kinect Fusion Scanner"

(

--required variables

local mySensor,kinectMesh,kinectVertices

local maxVertices,maxFaces

local FusionFrame = dotNetObject FusionFloatImageFrame 640 480

local KinectFrame = dotNetObject "Microsoft.Kinect.DepthImagePixel[]" 307200

local matrix4 = dotnetclass "microsoft.kinect.matrix4"

local volParam = dotnetobject "Microsoft.Kinect.Toolkit.Fusion.ReconstructionParameters" 128 384 384 384

--arguments:

--voxels per meter

--resolution x \ y \ z

local theProcessor = dotNetClass "Microsoft.Kinect.Toolkit.Fusion.ReconstructionProcessor"

--theProcessor.amp selects the GPU (C++ AMP) processor; -1 lets the SDK pick the default device

local volume = FusionReconstruction.FusionCreateReconstruction volParam theProcessor.amp -1 (matrix4.identity)

local framesCompleted = 0

--fn definitions

fn OpenNextFrame args =--puts depth data to FusionFrame variable

(

framesCompleted += 1

local result = args.OpenDepthImageFrame()

if classof result == dotnetobject then

(

result.CopyDepthImagePixelDataTo KinectFrame

FusionDepthProcessor.DepthToDepthFloatFrame KinectFrame 640 480 FusionFrame 0.35 8 false

--arguments:

--simple depth greyscale image

--x resolution

--y resolution

--fusion frame storage; can be used to create multiple meshes

--min meters

--max meters

--flip result on x

)

)

fn stopSensor theSensor =

(

theSensor.DepthStream.disable()

dotNet.removeAllEventHandlers theSensor

theSensor.stop()

format "\nFinished; % frames\n" framesCompleted

framesCompleted = 0

mySensor.DepthStream.IsEnabled

)

fn startSensor =

(

mySensor = kinectSensor.kinectsensors.item[0]

dotNet.addEventHandler mySensor "DepthFrameReady" OpenNextFrame

mySensor.start()

mySensor.DepthStream.enable()

format "\nScanner enabled; %" mySensor.DepthStream.IsEnabled

mySensor.DepthStream.IsEnabled

)

button btnScan "Scan!" enabled:false

on btnScan pressed do

(

local cameraTransform = volume.GetCurrentWorldToCameraTransform()

volume.ProcessFrame fusionFrame 1 128 cameraTransform --at this point you can add multiple frames to the same mesh

kinectMesh = volume.CalculateMesh 1 --arg == density

kinectVertices = kinectMesh.getVertices()

maxVertices = for vertINT = 0 to kinectVertices.count - 1 collect [kinectVertices.item[vertINT].x,kinectVertices.item[vertINT].y,kinectVertices.item[vertINT].z]

maxFaces = for vertINT = 1 to kinectVertices.count by 3 collect [vertINT,vertINT + 1,vertINT + 2]

mesh vertices:maxVertices faces:maxFaces

)

checkbutton btnEnable "enable Sensor"

on btnEnable changed changedTo do btnEnable.checked = btnScan.enabled = if changedTo then startSensor() else stopSensor mySensor

on kinectScanner open do

(

btnEnable.checked = btnScan.enabled = startSensor()

--Interesting properties:

--showproperties kinectScanner.mySensor.depthStream.Format -- colorStream has the same

)

on kinectScanner close do stopSensor mySensor

)

createDialog kinectScanner

if false then kinectScanner.stopSensor kinectScanner.mySensor --shut down kinect ; in case of errors!
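The script above integrates only one frame per press of the Scan button. Since Fusion refines detail over multiple shots, one possible next step is to integrate every incoming depth frame into the same volume and only build the mesh on demand. The following is a minimal, untested sketch that uses only calls already present in the script; the helper name `integrateFrame`, its parameter names, and its defaults are hypothetical.

```maxscript
-- Hypothetical helper (not part of the original script):
-- integrates one depth-float frame into an existing reconstruction volume.
-- alignIterations and maxWeight default to the values the script already uses.
fn integrateFrame theVolume theFrame alignIterations:1 maxWeight:128 =
(
    -- reuse the camera pose the volume currently holds
    local camTM = theVolume.GetCurrentWorldToCameraTransform()
    -- hands back whatever ProcessFrame returns
    theVolume.ProcessFrame theFrame alignIterations maxWeight camTM
)
```

In the rollout this could be called as `integrateFrame volume FusionFrame` at the end of OpenNextFrame, right after DepthToDepthFloatFrame, leaving the Scan button to call only CalculateMesh. The alignment iteration count of 1 is simply the value from the original script; a moving sensor may need a higher value.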

Note that the walls are stucco, which I think contributes to the roughness.

The variation in results comes from changing the arguments in this line:

local volParam = dotnetobject "Microsoft.Kinect.Toolkit.Fusion.ReconstructionParameters" 128 384 384 384
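To see what those four numbers mean physically, divide each resolution by the voxels-per-meter value. The sketch below does that arithmetic; `volumeExtents` is a hypothetical helper added for illustration and is not part of the Kinect SDK or the script.

```maxscript
-- Hypothetical helper: physical size of the reconstruction volume in meters,
-- given the same four arguments passed to ReconstructionParameters.
fn volumeExtents voxelsPerMeter resX resY resZ =
(
    local vpm = voxelsPerMeter as float
    [resX / vpm, resY / vpm, resZ / vpm]
)

-- Values from the script: 128 voxels per meter, 384 voxels along each axis
extents = volumeExtents 128 384 384 384
voxelEdgeMM = 1000.0 / 128 -- edge length of one voxel in millimeters
format "Volume: % m, voxel edge: % mm\n" extents voxelEdgeMM
```

With the script's values this is a 3 m cube with voxels just under 8 mm across. Doubling voxels per meter to 256 at the same resolution would halve both: a 1.5 m cube with voxels of roughly 4 mm, so finer detail over a smaller capture area.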

That's pretty cool. I didn't realize you can run Kinect Fusion directly from Max.

I’m glad someone found it interesting. It’s not really that hard to imagine producing this in a way that is superior to the Fusion samples…